Memory

Silicon Motion announces new devices at Future of Memory and Storage summit 2025: PCIe 6.0 SSDs, 256 / 512 TB drives, and next-gen 16K LDPC

Silicon Motion’s program at the ongoing Future of Memory and Storage (FMS) conference was packed with announcements. The company offered a glimpse at controllers for PCIe 6.0 SSDs for consumer and data center applications, shared details on the evolution of its MonTitan SM8300-series PCIe Gen5 platforms for enterprise SSDs, and discussed next-generation Low Density Parity…

A Code Implementation to Build a Multi-Agent Research System with OpenAI Agents, Function Tools, Handoffs, and Session Memory

In this tutorial, we begin by showcasing the power of OpenAI Agents as the driving force behind our multi-agent research system. We set up our Colab environment with the OpenAI API key, install the OpenAI Agents SDK, and then define three custom function tools (web_search, analyze_data, and save_research) to harness the agents’ capabilities. We instantiate three…

The Memory Magic Inside Your Devices

Memory helps devices run fast and smoothly. Learn how new tech fixes errors, keeps data safe, and why better memory makes your gadgets work smarter every day. Memory modules are essential to a computer and largely determine its performance. They store the data the system is actively working on, which helps in the execution of tasks,…

A Coding Guide to Build an Intelligent Conversational AI Agent with Agent Memory Using Cognee and Free Hugging Face Models

In this tutorial, we delve into building an advanced AI agent with agent memory using Cognee and Hugging Face models, relying entirely on free, open-source tools that work seamlessly in Google Colab and other notebooks. We configure Cognee for memory storage and retrieval, integrate a lightweight conversational model for generating responses, and bring it all together…

G.Skill Trident Z5 Neo RGB DDR5-6000 is the current top 96GB memory kit for AMD CPUs

The Trident Z5 Neo RGB DDR5-6000 C26 can compete with the best RAM options available today. DDR5 has brought notable improvements in terms…

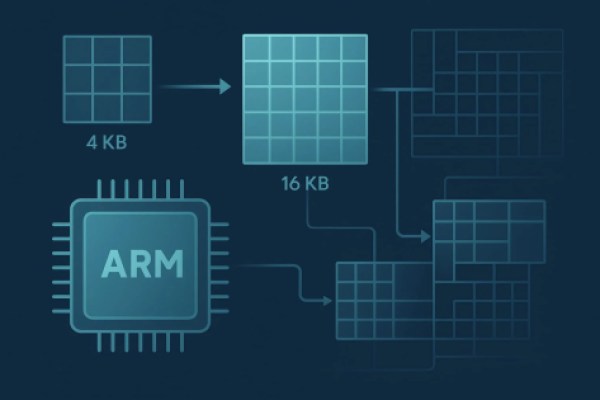

Understanding Memory Page Sizes on Arm64 — SitePoint

One of the ways that the Arm64 architecture is different from x86 is the ability to configure the size of memory pages in the Memory Management Unit (MMU) of the CPU to 4K, 16K, or 64K. This article summarizes what memory page size is, how to configure page size on Linux systems, and when it…

Dead RTX 5090 with a cracked PCB gets urgent surgery from repair wizard — tech casually reballs the core, replaces a memory chip twice, and runs more wires across its traces than the NSA

A broken RTX 5090 recently landed on the desk of Northwest Repairs, run by “Tony,” who also serves as the face of the shop’s chaotic YouTube channel. Northwest specializes in semiconductor work, particularly GPUs that need to be brought back from the dead. So, when a PNY RTX 5090 came in with a cracked PCB and…

ChatGPT’s Memory Limit Is Frustrating — The Brain Shows a Better Way

If you’re a ChatGPT power user, you may have recently encountered the dreaded “Memory is full” screen. This message appears when you hit the limit of ChatGPT’s saved memories, and it can be a significant hurdle during long-term projects. Memory is supposed to be a key feature for complex, ongoing tasks – you want your…

What is DRAM Memory? Understanding Its Role in RAM, SSDs & System Speed

Computing has become an essential part of day-to-day life, and knowing how your device allocates memory is important, especially when considering performance. What is DRAM? Why is it a volatile memory? And what is DRAM in SSDs? We will answer all your queries with the help of this guide. If you…

Mem0: A Scalable Memory Architecture Enabling Persistent, Structured Recall for Long-Term AI Conversations Across Sessions

Large language models can generate fluent responses, emulate tone, and even follow complex instructions; however, they struggle to retain information across multiple sessions. This limitation becomes more pressing as LLMs are integrated into applications that require long-term engagement, such as personal assistance, health management, and tutoring. In real-life conversations, people recall preferences, infer behaviors, and…
