When Nvidia began to disclose details about its new 4-bit floating point format — NVFP4 — earlier this year, it stated that while it is mainly designed for inference, it could also be used for AI training without significant loss in accuracy. Recently, the company released a paper describing how it managed to train a 12-billion-parameter model on a 10-trillion-token dataset using the NVFP4 format, with several supporting techniques, and achieved results that closely match those of an FP8 baseline.

Blackwell and NVFP4: A match made in heaven

Nvidia’s NVFP4 is a purpose-built 4-bit floating point format developed for the Blackwell GPU architecture and aimed at improving the efficiency of both training and inference. It combines a highly compact data representation with a multi-level scaling strategy, achieving accuracy close to BF16 while substantially reducing compute and memory requirements.

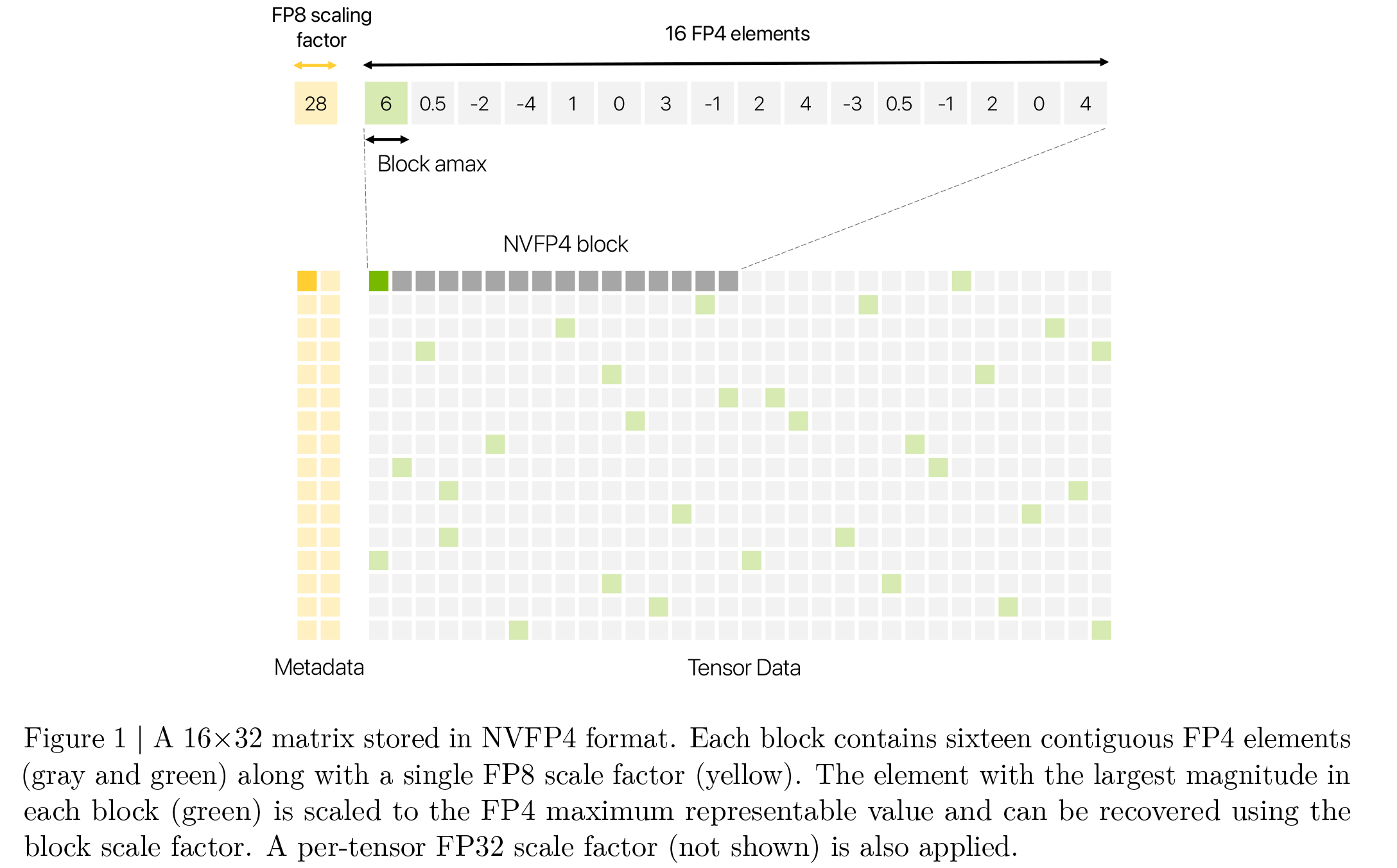

Structurally, NVFP4 uses the same E2M1 layout as standard FP4 formats, consisting of 1 sign bit, 2 exponent bits, and 1 mantissa bit, which lets it represent the values 0, 0.5, 1, 1.5, 2, 3, 4, and 6 with either sign, so its range spans −6 to +6. To overcome the inherently limited dynamic range of 4-bit formats, Nvidia introduces a hierarchical scaling mechanism: every 16-element block of FP4 values is assigned a dedicated scale factor stored in FP8 using the E4M3 layout, and an FP32 scale factor is additionally applied across the full tensor. Nvidia claims that this two-tier system keeps numerical noise low without sacrificing the efficiency benefits of a 4-bit format.
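To make the two-level scheme concrete, here is a minimal Python sketch that simulates NVFP4 quantization in software (quantize, then immediately dequantize). It assumes a per-tensor scale chosen so the tensor's largest value maps to 448 × 6, the top of the combined E4M3 and E2M1 range, and a per-block scale of roughly the block maximum divided by 6, rounded to E4M3. The helper names are hypothetical, ties are broken toward the smaller magnitude rather than with the hardware's round-to-nearest-even, and Nvidia's exact recipe may differ in its details.

```python
import math

# Non-negative magnitudes representable in FP4 E2M1 (1 sign, 2 exponent, 1 mantissa bit)
E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
E2M1_MAX = 6.0
E4M3_MAX = 448.0  # largest finite FP8 E4M3 value
BLOCK = 16        # NVFP4 block size


def round_e2m1(x):
    """Round to the nearest E2M1 value, saturating at +/-6 (ties go to the smaller magnitude)."""
    mag = min(E2M1_GRID, key=lambda g: abs(abs(x) - g))
    return math.copysign(mag, x)


def round_e4m3(x):
    """Round a non-negative float to the nearest FP8 E4M3 value, saturating at 448."""
    if x <= 0.0:
        return 0.0
    _, e = math.frexp(x)                    # x = m * 2**e with 0.5 <= m < 1
    step = math.ldexp(1.0, max(e - 4, -9))  # 3 mantissa bits; subnormal spacing is 2**-9
    return min(round(x / step) * step, E4M3_MAX)


def fake_quantize_nvfp4(values):
    """Quantize then dequantize a 1D list using NVFP4-style two-level scaling."""
    amax = max((abs(v) for v in values), default=0.0)
    if amax == 0.0:
        return [0.0] * len(values)
    # Per-tensor FP32 scale: maps the tensor max onto the top of the E4M3 x E2M1 range
    global_scale = (E4M3_MAX * E2M1_MAX) / amax
    out = []
    for start in range(0, len(values), BLOCK):
        block = values[start:start + BLOCK]
        block_amax = max(abs(v) for v in block)
        # Per-block scale maps the block max to 6.0 and is stored (rounded) as E4M3
        scale_e4m3 = round_e4m3(block_amax / E2M1_MAX * global_scale)
        decode = scale_e4m3 / global_scale
        if decode == 0.0:
            out.extend(0.0 for _ in block)
        else:
            out.extend(round_e2m1(v / decode) * decode for v in block)
    return out


data = [0.01 * (i - 16) for i in range(32)]  # two 16-element blocks
deq = fake_quantize_nvfp4(data)
print(max(abs(a - b) for a, b in zip(data, deq)))  # worst-case reconstruction error
```

Each 16-element block gets its own scale, so a block of small values is not forced to share the coarse scale of a block containing the tensor's largest value; the FP32 per-tensor factor keeps those block scales inside E4M3's limited range.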

Nvidia’s latest Blackwell GPUs feature tensor cores that can perform general matrix multiplication (GEMM) in narrow formats such as MXFP8, MXFP6, MXFP4, and NVFP4. For these block-scaled formats, Blackwell tensor cores compute partial dot products over each block of input values, multiply each partial result by the corresponding block scale factors to undo the scaling applied during quantization, and accumulate the results in FP32, which is exactly how NVFP4 is meant to be used.
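The block-scaled GEMM flow can be illustrated with a single dot product. In this hypothetical Python sketch, each operand is a list of already-quantized FP4 element values plus one decode scale per 16-element block. The per-tensor FP32 scale is assumed to be folded into those block scales, and a Python float (FP64) stands in for the FP32 accumulator.

```python
def block_scaled_dot(a_vals, a_scales, b_vals, b_scales, block=16):
    """Dot product of two block-scaled vectors.

    a_vals/b_vals hold low-precision element values (e.g. FP4 E2M1 numbers);
    a_scales/b_scales hold one decode scale per block. Each block's partial dot
    product is computed first, then multiplied by both blocks' scales and added
    to a wide accumulator.
    """
    if len(a_vals) != len(b_vals):
        raise ValueError("operands must have the same length")
    acc = 0.0
    for k, start in enumerate(range(0, len(a_vals), block)):
        partial = sum(x * y for x, y in zip(a_vals[start:start + block],
                                            b_vals[start:start + block]))
        acc += partial * a_scales[k] * b_scales[k]
    return acc


a_vals, a_scales = [1.0] * 32, [0.5, 2.0]
b_vals, b_scales = [0.5] * 32, [1.0, 1.0]
result = block_scaled_dot(a_vals, a_scales, b_vals, b_scales)
# Reference: dequantize element by element, then take an ordinary dot product
reference = sum((x * a_scales[i // 16]) * (y * b_scales[i // 16])
                for i, (x, y) in enumerate(zip(a_vals, b_vals)))
print(result, reference)  # 20.0 20.0
```

Because the scales multiply a whole partial sum rather than every element, the result matches the dot product of the dequantized vectors, while the inner products themselves can run on the narrow values.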

These cores also support built-in rounding methods, including round-to-nearest-even and stochastic rounding, which are important for ensuring stable training when using low-precision formats like FP4. Nvidia says that NVFP4 operations achieve a 4X speed boost over BF16 on GB200 and up to 6X on GB300. Additionally, memory consumption is reduced by roughly half compared to FP8.

But can it be used for training?

Model setup and training approach

To evaluate whether NVFP4 is viable for training, Nvidia trained a 12-billion-parameter large language model based on a hybrid Mamba-Transformer architecture. The architecture came from the Nemotron-H family and combined Mamba-2 blocks, standard feed-forward layers, and self-attention modules. Training followed a warmup-stable-decay schedule: after warmup, the learning rate was held constant through the first 80% of the run and gradually reduced during the final 20%.
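A warmup-stable-decay schedule is simple to express in code. The sketch below is illustrative only: the function name, the linear warmup, the linear decay shape, and the final learning rate are assumptions, since the article only specifies a constant rate for the first 80% of the run and a gradual reduction over the final 20%.

```python
def wsd_lr(step, total_steps, peak_lr, warmup_steps, decay_frac=0.2, min_lr=0.0):
    """Warmup-stable-decay learning rate (hypothetical helper).

    Assumed shape: linear warmup to peak_lr, a constant plateau, then a linear
    decay to min_lr over the last `decay_frac` of the run.
    """
    decay_steps = round(total_steps * decay_frac)
    decay_start = total_steps - decay_steps
    if step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps
    if step < decay_start:
        return peak_lr
    progress = min((step - decay_start) / max(1, decay_steps), 1.0)
    return peak_lr + (min_lr - peak_lr) * progress


# Constant until 80% of a 1,000-step run, halfway through the decay at step 900
print(wsd_lr(500, 1000, 1.0, warmup_steps=10))  # 1.0
print(wsd_lr(900, 1000, 1.0, warmup_steps=10))  # 0.5
```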

Note that NVFP4 can be applied to models like LLaMA, OpenAI’s GPT, and other Transformer-based LLMs; it is not specific to the Mamba-Transformer architecture used in the demonstration. However, adapting it may require tuning the number of BF16 layers, validating block scaling choices, and performing quantization-aware training if the model wasn’t originally designed for low-precision formats.

Each training sequence contained 8192 tokens, and the batch size was set to 736. The dataset used for training was a diverse blend of content types, including general internet text, programming code, mathematical problems, multilingual corpora, academic papers, and synthetic instruction-tuned samples. The blending was carried out in three phases to ensure balanced exposure to various data types over the course of training.
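Those two numbers also pin down the scale of the run. Assuming the batch size counts sequences and stayed fixed for all 10 trillion tokens (the article does not say), each optimizer step processes about 6 million tokens, which works out to roughly 1.66 million steps:

```latex
\begin{aligned}
\text{tokens per step} &= 8192 \times 736 = 6{,}029{,}312 \approx 6.03 \times 10^{6} \\
\text{optimizer steps} &\approx \frac{10 \times 10^{12}}{6.03 \times 10^{6}} \approx 1.66 \times 10^{6}
\end{aligned}
```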

Accuracy compared to FP8

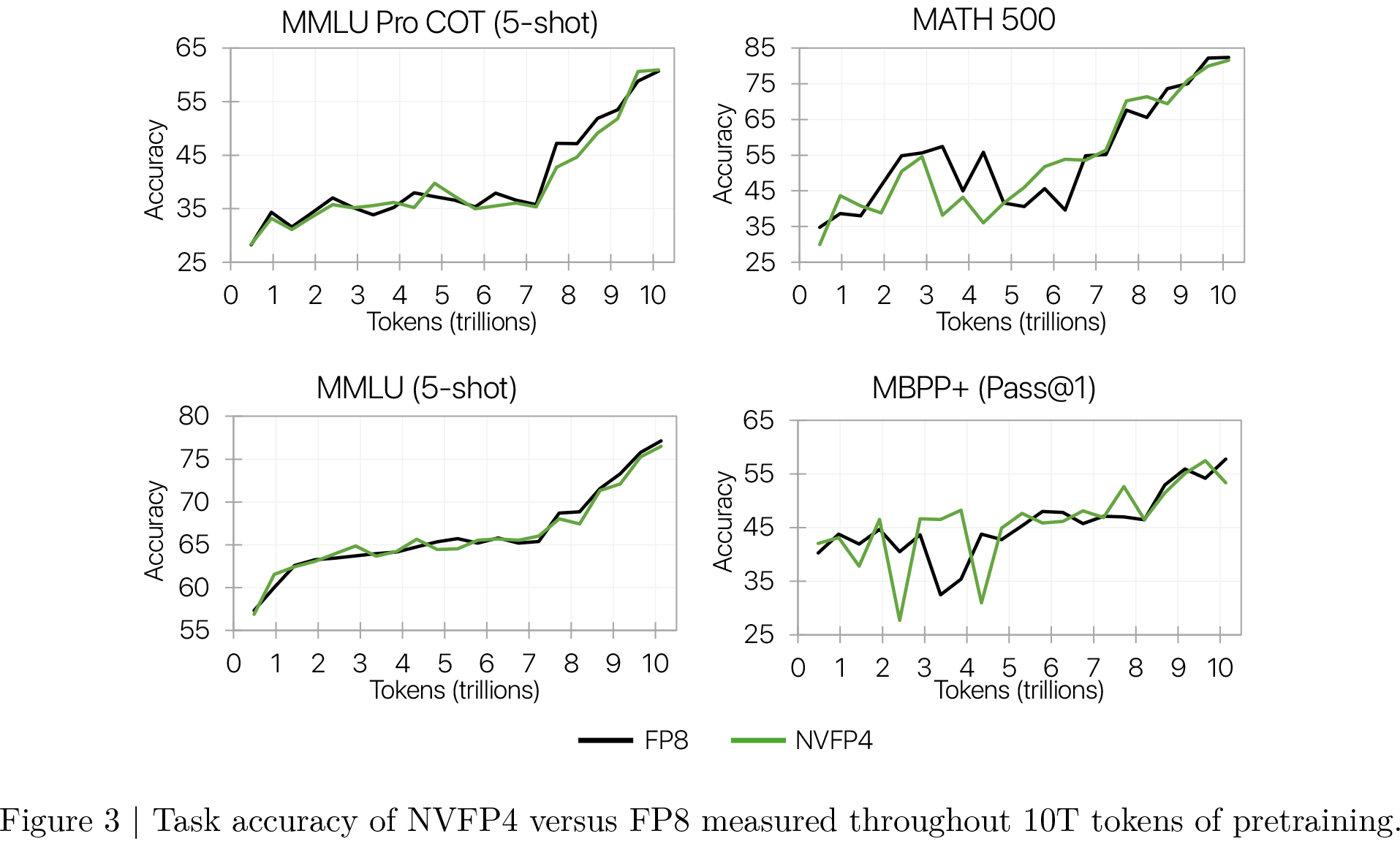

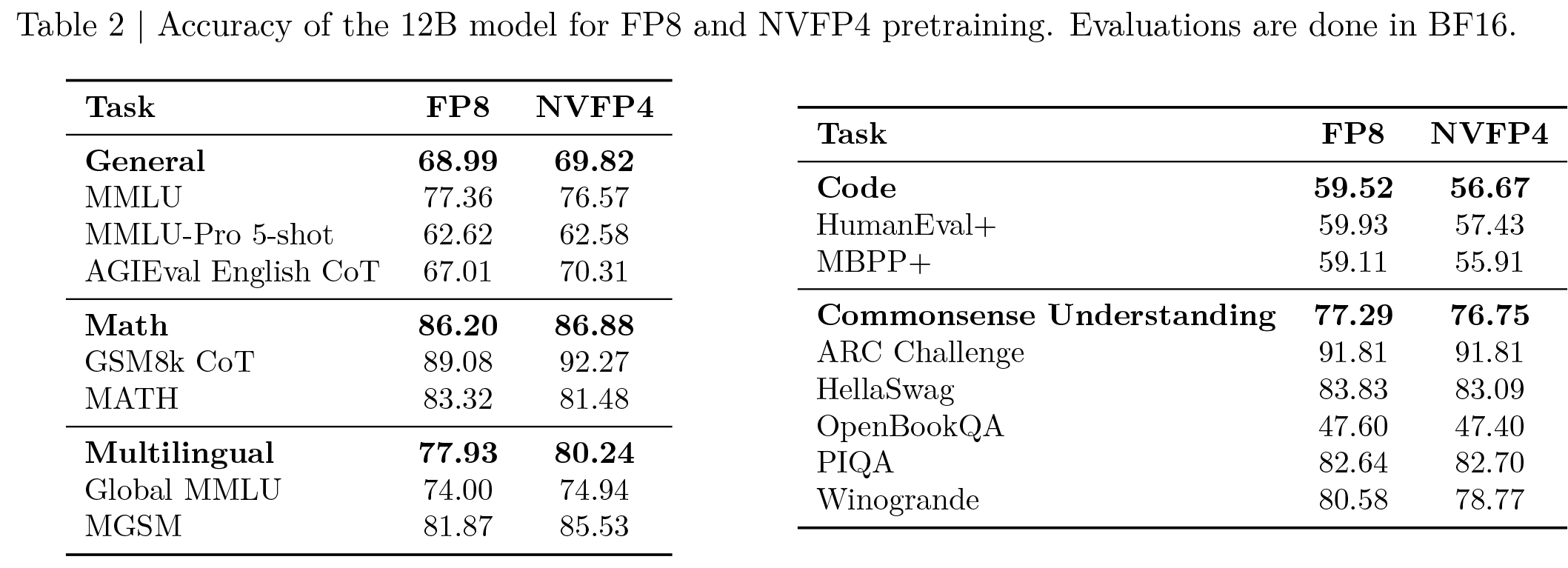

Throughout training, the model trained with NVFP4 tracked the FP8 baseline’s validation loss very closely. According to Nvidia, the relative gap between NVFP4 and FP8 loss stayed under 1% for most of the run and rose only to just above 1.5% near the end, when the learning rate began to decay. This small increase in loss did not translate into a measurable drop in task accuracy, however.

NVFP4 achieved results comparable to FP8 across a wide range of downstream tasks, including common-sense reasoning, mathematics, knowledge-heavy questions, and multilingual benchmarks. For instance, NVFP4 reached 62.58% on the MMLU-Pro 5-shot benchmark, nearly matching FP8’s 62.62%. The only notable accuracy drop occurred in code-related tasks such as MBPP+ and HumanEval+, where NVFP4 lagged behind by a few percentage points. However, Nvidia attributed this gap to normal checkpoint-to-checkpoint variability rather than to a systemic flaw in the format.

Techniques for stable 4-bit training

Training large models with FP4 precision requires several adjustments to ensure stability and accuracy. One of the key strategies is keeping about 15% of the linear layers in BF16, mainly in the final blocks of the model. Using NVFP4 across all layers led to divergence, but Nvidia found that even retaining just the last four blocks in BF16 was enough for stable training, which implies that the BF16 footprint could be reduced further.
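Keeping only the final blocks in high precision is essentially a per-block configuration. The hypothetical helper below labels each block of a model as BF16 or NVFP4; the block count and defaults are illustrative assumptions, and the share of blocks it reports is not the same as the article's 15% figure, which counts linear layers.

```python
def precision_plan(num_blocks, bf16_last=4, bf16_first=0):
    """Label each block 'bf16' or 'nvfp4' (hypothetical helper).

    The first `bf16_first` and last `bf16_last` blocks keep their linear layers
    in BF16; every other block runs its GEMMs in NVFP4.
    """
    plan = []
    for idx in range(num_blocks):
        keep_high_precision = idx < bf16_first or idx >= num_blocks - bf16_last
        plan.append("bf16" if keep_high_precision else "nvfp4")
    return plan


# Example: a hypothetical 24-block model keeping only its last four blocks in BF16
plan = precision_plan(24, bf16_last=4)
bf16_share = plan.count("bf16") / len(plan)  # 4 / 24, about 17% of blocks
print(plan[-5:], round(bf16_share, 3))
```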

To maintain consistency between forward and backward passes (which use transposed weights), Nvidia used 2D block scaling for weights: weights were grouped into 16×16 blocks with a shared scale factor applied in both directions. For activations and gradients, finer 1×16 block scaling was used, which improved quantization accuracy without introducing instability.
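The reason for square weight blocks can be checked with a small experiment. The hypothetical Python helpers below compute, for every weight, the maximum absolute value of the block it falls into (the quantity a block scale is derived from), once in W and once in its transpose. With 16×16 tiles every weight sees the same block in both orientations; with 1×16 row segments it does not, so the same weight could be quantized with different scales in the forward and backward passes.

```python
def tile_amax(mat, i, j, tile_rows, tile_cols):
    """Absolute max of the (tile_rows x tile_cols) tile that contains element (i, j)."""
    r0 = (i // tile_rows) * tile_rows
    c0 = (j // tile_cols) * tile_cols
    return max(
        abs(mat[r][c])
        for r in range(r0, min(r0 + tile_rows, len(mat)))
        for c in range(c0, min(c0 + tile_cols, len(mat[0])))
    )


def transpose(mat):
    return [list(col) for col in zip(*mat)]


def same_scales_after_transpose(mat, tile_rows, tile_cols):
    """True if every element sees the same block max (hence scale) in W and in W^T."""
    wt = transpose(mat)
    return all(
        tile_amax(mat, i, j, tile_rows, tile_cols) == tile_amax(wt, j, i, tile_rows, tile_cols)
        for i in range(len(mat))
        for j in range(len(mat[0]))
    )


# A 32x32 weight matrix with one large value and one small neighbour in the same column
w = [[0.0] * 32 for _ in range(32)]
w[0][0], w[1][0] = 100.0, 1.0
print(same_scales_after_transpose(w, 16, 16))  # True: 16x16 tiles survive transposition
print(same_scales_after_transpose(w, 1, 16))   # False: 1x16 segments do not
```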

To deal with outliers in gradients, Nvidia applied Random Hadamard Transforms to the inputs of the weight-gradient (Wgrad) computation. These transforms redistribute large values more evenly across a block, making them easier to represent in FP4. The transforms were not applied to other tensor types, and a single 16×16 Hadamard matrix with a shared random sign vector was used across all layers.
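A Random Hadamard Transform multiplies a block of values by random signs and then by a normalized Hadamard matrix, which smears a single outlier across the whole block. The pure-Python sketch below assumes a 16-element block and one shared sign vector, mirroring the setup described above; the function names are hypothetical. Because the transform is orthogonal, applying it to both inputs of a GEMM along their shared (reduction) dimension leaves the product unchanged, which the final check illustrates.

```python
import math
import random


def hadamard(n):
    """Sylvester construction of an n x n Hadamard matrix (n must be a power of two)."""
    h = [[1.0]]
    while len(h) < n:
        h = [row + row for row in h] + [row + [-x for x in row] for row in h]
    return h


def rht(vec, signs):
    """Random Hadamard transform: (1/sqrt(n)) * H @ diag(signs) @ vec."""
    n = len(vec)
    h = hadamard(n)
    flipped = [s * v for s, v in zip(signs, vec)]
    return [sum(h[i][k] * flipped[k] for k in range(n)) / math.sqrt(n) for i in range(n)]


def inverse_rht(vec, signs):
    """Undo rht: diag(signs) @ H^T @ vec / sqrt(n); Sylvester H is symmetric, so H^T = H."""
    n = len(vec)
    h = hadamard(n)
    mixed = [sum(h[i][k] * vec[k] for k in range(n)) / math.sqrt(n) for i in range(n)]
    return [s * m for s, m in zip(signs, mixed)]


rng = random.Random(0)
signs = [rng.choice([-1.0, 1.0]) for _ in range(16)]  # one sign vector, reused everywhere

grad = [0.01] * 16
grad[3] = 10.0                      # a single outlier
mixed = rht(grad, signs)
print(max(abs(x) for x in grad), max(abs(x) for x in mixed))  # outlier spread over 16 slots

# Orthogonality: transforming both operands preserves their dot product
other = [0.1 * k for k in range(16)]
lhs = sum(a * b for a, b in zip(rht(grad, signs), rht(other, signs)))
rhs = sum(a * b for a, b in zip(grad, other))
```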

Lastly, hardware-accelerated stochastic rounding was used for gradient quantization, which helps avoid the rounding bias that can build up during training. Nvidia applied it only to gradients, because using it on forward-pass activations increased noise and degraded training quality.
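The bias that stochastic rounding removes is easy to see on the E2M1 grid. Under round-to-nearest, a gradient value of 2.4 always becomes 2.0, an error of −0.4 that repeats every time; stochastic rounding instead rounds up to 3.0 with probability 0.4, so the result is correct on average. This is a software illustration with hypothetical function names, not Blackwell's hardware implementation.

```python
import bisect
import math
import random

E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]


def nearest_e2m1(x):
    """Deterministic round-to-nearest onto the E2M1 grid (ties to the smaller magnitude)."""
    mag = min(E2M1_GRID, key=lambda g: abs(abs(x) - g))
    return math.copysign(mag, x)


def stochastic_e2m1(x, rng):
    """Round |x| up with probability proportional to its distance above the lower grid point."""
    mag = min(abs(x), E2M1_GRID[-1])            # saturate at 6.0
    hi_idx = bisect.bisect_left(E2M1_GRID, mag)
    if E2M1_GRID[hi_idx] == mag:                # already representable
        return math.copysign(mag, x)
    lo, hi = E2M1_GRID[hi_idx - 1], E2M1_GRID[hi_idx]
    p_up = (mag - lo) / (hi - lo)
    return math.copysign(hi if rng.random() < p_up else lo, x)


rng = random.Random(42)
samples = [stochastic_e2m1(2.4, rng) for _ in range(20_000)]
mean_sr = sum(samples) / len(samples)   # close to 2.4 on average
print(nearest_e2m1(2.4), round(mean_sr, 2))
```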

Late-stage precision switching

In scenarios where minimizing final loss is crucial, Nvidia tested switching from NVFP4 to BF16 late in training. A switch made at 8.2 trillion tokens significantly reduced the gap in final loss, and switching only the forward pass to BF16 had nearly the same effect as switching both the forward and backward passes. By contrast, switching at 10 trillion tokens, near the end of training, had minimal impact because the learning rate was already low. These results suggest that limited use of higher precision near the end of training can improve accuracy while preserving most of FP4’s efficiency benefits.
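In a training loop, the late-stage switch amounts to a small precision schedule. The helper below is hypothetical and only returns labels; a real framework would use them to pick which GEMM kernels to call. The 8.2-trillion-token threshold is the switch point from Nvidia's experiment.

```python
def gemm_precisions(tokens_seen, switch_at_tokens, switch_backward=False):
    """Return (forward, backward) GEMM precision for the current point in training.

    Hypothetical helper: before `switch_at_tokens` both passes use NVFP4;
    afterwards the forward pass moves to BF16, and the backward pass does too
    only if requested.
    """
    if tokens_seen < switch_at_tokens:
        return ("nvfp4", "nvfp4")
    return ("bf16", "bf16" if switch_backward else "nvfp4")


SWITCH_AT = 8.2e12  # switch point from Nvidia's experiment, in tokens
print(gemm_precisions(5.0e12, SWITCH_AT))  # ('nvfp4', 'nvfp4')
print(gemm_precisions(9.0e12, SWITCH_AT))  # ('bf16', 'nvfp4')
```

Defaulting to a forward-only switch reflects the finding that it recovers nearly as much loss as switching both passes, while leaving the backward GEMMs in NVFP4.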

NVFP4 vs. MXFP4

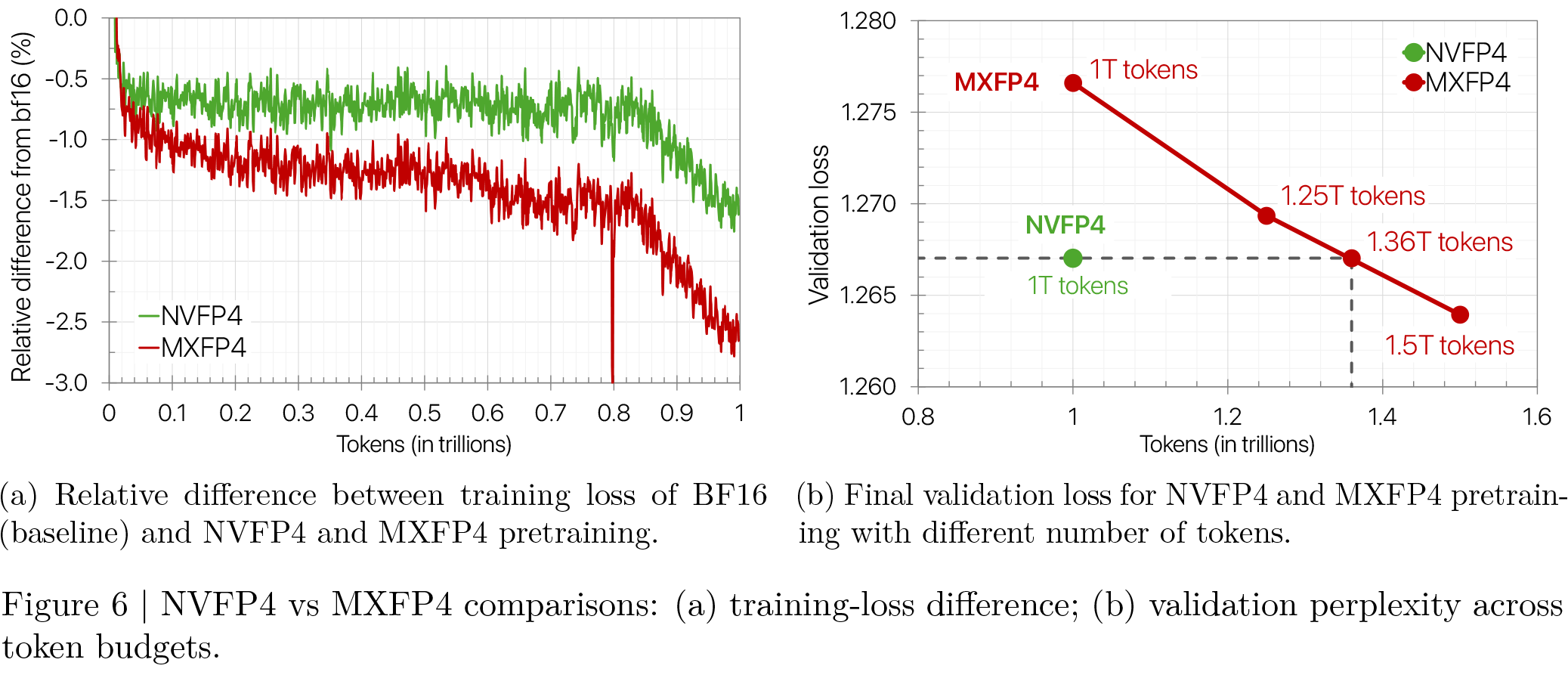

To compare NVFP4 against the more common MXFP4 format defined by the Open Compute Project (which Huawei’s upcoming Ascend 950-series and later processors will also support), Nvidia trained an 8-billion-parameter model with each format on the same 1-trillion-token dataset. NVFP4 reached a final loss about 1.5% above the BF16 reference, while MXFP4’s final loss was about 2.5% above it. To match NVFP4’s final loss, the MXFP4 model needed 1.36 trillion tokens, or 36% more data.
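Part of NVFP4’s advantage over MXFP4 comes from how block scales are stored: under the OCP MX specification, MXFP4 shares one power-of-two (E8M0) scale across 32 elements, while NVFP4 uses a finer-grained E4M3 scale per 16 elements. The simplified Python sketch below (hypothetical helpers) compares the two scale choices for one block. It ignores NVFP4’s per-tensor scale and the E4M3 rounding of its block scale, so it illustrates the mechanism rather than reproducing Nvidia’s results.

```python
import math

E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]


def round_e2m1(x):
    """Nearest E2M1 value, saturating at +/-6 (ties go to the smaller magnitude)."""
    mag = min(E2M1_GRID, key=lambda g: abs(abs(x) - g))
    return math.copysign(mag, x)


def mxfp4_scale(amax):
    """MX-style shared scale: the power of two 2**(floor(log2(amax)) - 2), stored as E8M0."""
    _, e = math.frexp(amax)              # amax = m * 2**e with 0.5 <= m < 1
    return math.ldexp(1.0, (e - 1) - 2)  # floor(log2(amax)) = e - 1; 2 = exponent of 6.0


def nvfp4_scale_unrounded(amax):
    """NVFP4-style scale amax / 6 (E4M3 rounding and the per-tensor scale are ignored)."""
    return amax / 6.0


def reconstruct(block, scale):
    """Quantize each value to E2M1 with the given block scale, then dequantize."""
    return [round_e2m1(v / scale) * scale for v in block]


block = [3.5, 1.0, -0.25]
mx = reconstruct(block, mxfp4_scale(3.5))
nv = reconstruct(block, nvfp4_scale_unrounded(3.5))
print(mx)  # [3.0, 1.0, -0.25]
print(nv)
```

With a block maximum of 3.5, the power-of-two scale is 0.5, which maps 3.5 to 7.0 on the E2M1 grid; that exceeds the largest representable value, so it saturates to 6.0 and comes back as 3.0. An amax ÷ 6 scale recovers 3.5 (up to floating-point error), though it rounds some of the smaller values in this block differently.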

AI training gets NVFP4 boon

Nvidia’s NVFP4 format enables accurate, stable, and efficient training of large-scale LLMs at 4-bit precision, according to the company’s own testing. By combining a more precise quantization format with techniques such as mixed precision, consistent scaling, stochastic rounding, and outlier handling, the company successfully trained a frontier-class 12B model on a 10T-token dataset.

Compared with the MXFP4 format, NVFP4 delivers better convergence and data efficiency.

Nvidia’s future work will focus on further reducing the number of high-precision layers, extending NVFP4 to more model components, and evaluating its effectiveness in larger models and alternative architectures.

Follow Tom’s Hardware on Google News, or add us as a preferred source, to get our up-to-date news, analysis, and reviews in your feeds. Make sure to click the Follow button.