
Just a week ago — on January 20, 2025 — Chinese AI startup DeepSeek unleashed a new, open-source AI model called R1 that might have initially been mistaken for one of the ever-growing masses of nearly interchangeable rivals that have sprung up since OpenAI debuted ChatGPT (powered by its own GPT-3.5 model, initially) more than two years ago.

But that impression quickly proved unfounded. In that short time, DeepSeek’s mobile app has rocketed up the charts of the Apple App Store in the U.S. to dethrone ChatGPT for the number one spot. It has also triggered a massive market correction as investors dumped stock in formerly hot computer chip makers such as Nvidia, whose graphics processing units (GPUs) have been in high demand for use in massive superclusters to train new AI models and serve them up to customers on an ongoing basis (a process known as “inference”).

Venture capitalist Marc Andreessen, echoing sentiments of other tech workers, wrote on the social network X last night: “Deepseek R1 is AI’s Sputnik moment,” comparing it to the pivotal October 1957 launch of the first artificial satellite in history, Sputnik 1, by the Soviet Union, which sparked the “space race” between that country and the U.S. to dominate space travel.

Sputnik’s launch galvanized the U.S. to invest heavily in research and development of spacecraft and rocketry. While it’s not a perfect analogy — heavy investment was not needed to create DeepSeek-R1, quite the contrary (more on this below) — it does seem to signify a major turning point in the global AI marketplace, as for the first time, an AI product from China has become the most popular in the world.

But before we jump on the DeepSeek hype train, let’s take a step back and examine the reality. As someone who has extensively used OpenAI’s ChatGPT — on both web and mobile platforms — and followed AI advancements closely, I believe that while DeepSeek-R1’s achievements are noteworthy, it’s not time to dismiss ChatGPT or U.S. AI investments just yet. And please note, I am not being paid by OpenAI to say this — I’ve never taken money from the company and don’t plan on it.

What DeepSeek-R1 does well

DeepSeek-R1 is part of a new generation of large “reasoning” models that do more than answer user queries: They reflect on their own analysis while they are producing a response, attempting to catch errors before serving them to the user.

And in several areas, DeepSeek-R1 matches or surpasses OpenAI’s own reasoning model, o1, which was released in September 2024, initially only for ChatGPT Plus and Pro subscription users.

For instance, on the MATH-500 benchmark, which assesses high-school-level mathematical problem-solving, DeepSeek-R1 achieved a 97.3% accuracy rate, slightly outperforming OpenAI o1’s 96.4%. In terms of coding capabilities, DeepSeek-R1 scored 49.2% on the SWE-bench Verified benchmark, edging out OpenAI o1’s 48.9%.

DeepSeek-R1 also offers substantial cost savings. The model was reportedly developed with an investment of under $6 million, a fraction of the multibillion-dollar expenditure estimated for training models like OpenAI’s o1.

DeepSeek was essentially forced to become more efficient with scarce and older GPUs thanks to a U.S. export restriction on the tech’s sales to China. Additionally, DeepSeek provides API access at $0.14 per million tokens, significantly undercutting OpenAI’s rate of $7.50 per million tokens.
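To put that price gap in concrete terms, here is a minimal sketch of the arithmetic in Python. It uses only the two per-million-token rates quoted above. The monthly workload of 50 million tokens and the `api_cost` helper are hypothetical, chosen purely for illustration, and real bills would also depend on details such as separate input and output token rates.

```python
# Per-million-token prices as quoted in this article (USD).
DEEPSEEK_PRICE_PER_M = 0.14
OPENAI_PRICE_PER_M = 7.50


def api_cost(tokens: int, price_per_million: float) -> float:
    """Return the cost in USD of processing `tokens` tokens at a flat rate."""
    return tokens / 1_000_000 * price_per_million


# Hypothetical monthly workload, an assumption made only for illustration.
monthly_tokens = 50_000_000

deepseek_cost = api_cost(monthly_tokens, DEEPSEEK_PRICE_PER_M)
openai_cost = api_cost(monthly_tokens, OPENAI_PRICE_PER_M)
price_ratio = OPENAI_PRICE_PER_M / DEEPSEEK_PRICE_PER_M

print(f"DeepSeek: ${deepseek_cost:,.2f}")
print(f"OpenAI:   ${openai_cost:,.2f}")
print(f"OpenAI's quoted rate is roughly {price_ratio:.0f}x DeepSeek's")
```

At the quoted rates, the same hypothetical workload costs about $7 on DeepSeek versus $375 on OpenAI, a difference of more than 50 times.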

DeepSeek-R1’s massive efficiency gain, cost savings and equivalent performance to the top U.S. AI model have caused Silicon Valley and the wider business community to freak out over what appears to be a complete upending of the AI market, geopolitics, and known economics of AI model training.

While DeepSeek’s gains are revolutionary, the pendulum is swinging too far toward it right now

There’s no denying that DeepSeek-R1’s cost-effectiveness is a significant achievement. But let’s not forget that DeepSeek itself owes much of its success to U.S. AI innovations, going back to the initial 2017 transformer architecture developed by Google AI researchers (which started the whole LLM craze).

DeepSeek-R1 was trained on synthetic question-and-answer data and, according to the paper released by its researchers, specifically on the supervised fine-tuning “dataset of DeepSeek-V3,” the company’s previous (non-reasoning) model, which was found to have many indicators of being generated with OpenAI’s GPT-4o model itself!

It seems pretty clear-cut to say that without GPT-4o to provide this data, and without OpenAI’s own release of the first commercial reasoning model o1 back in September 2024, which created the category, DeepSeek-R1 would almost certainly not exist.

Furthermore, OpenAI’s success required vast amounts of GPU resources, paving the way for breakthroughs that DeepSeek has undoubtedly benefited from. The current investor panic about U.S. chip and AI companies feels premature and overblown.

ChatGPT’s vision and image generation capabilities are still hugely important and valuable in workplace and personal settings — DeepSeek-R1 doesn’t have any yet

While DeepSeek-R1 has impressed with its visible “chain of thought” reasoning — a kind of stream of consciousness wherein the model displays text as it analyzes the user’s prompt and seeks to answer it — and efficiency in text- and math-based workflows, it lacks several features that make ChatGPT a more robust and versatile tool today.

No vision capabilities

The official DeepSeek-R1 website and mobile app do let users upload photos and file attachments. But they can only extract text from those uploads using optical character recognition (OCR), one of the earliest computing technologies (dating back to 1959).

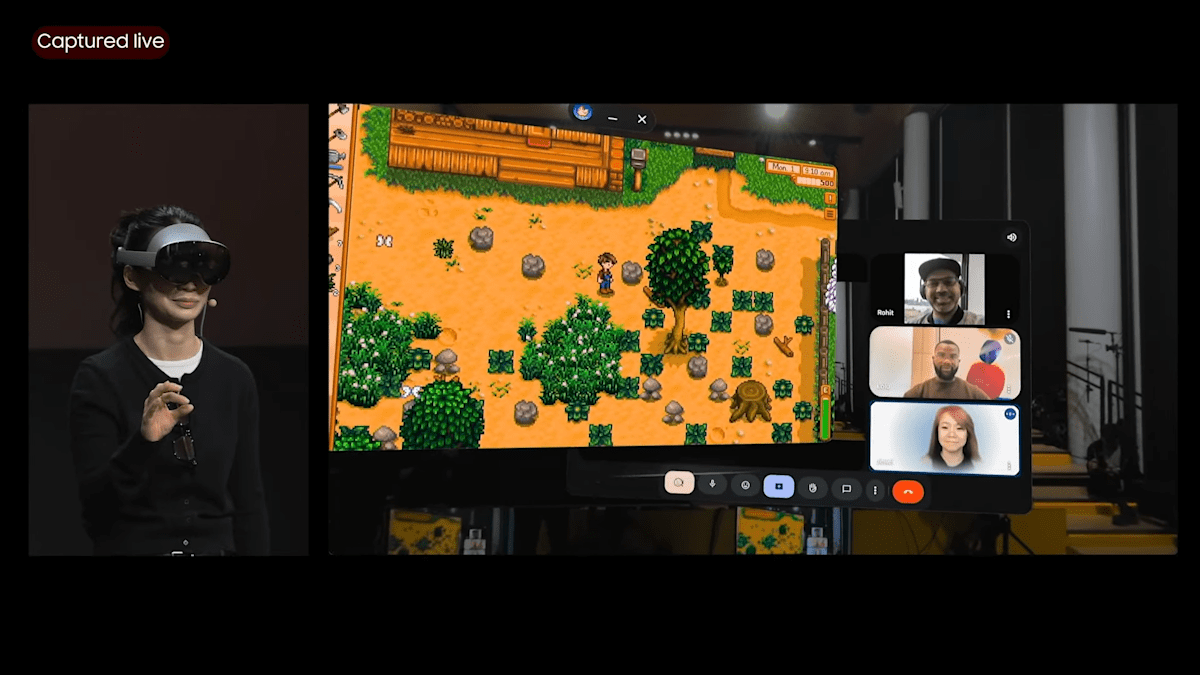

This pales in comparison to ChatGPT’s vision capabilities. A user can upload images without any text whatsoever and have ChatGPT analyze the image, describe it, or provide further information based on what it sees and the user’s text prompts.

ChatGPT can analyze the visual material users upload and provide detailed insights or actionable advice. For example, when I needed guidance on repairing my bike or maintaining my air conditioning unit, ChatGPT’s ability to process images proved invaluable. DeepSeek-R1 simply cannot do this yet.

No image generation

The absence of generative image capabilities is another major limitation. As someone who frequently generates AI images using ChatGPT (such as for this article’s own header), powered by OpenAI’s underlying DALL·E 3 model, I find the ability to create detailed and stylistic images with ChatGPT to be a game-changer.

This feature is essential for many creative and professional workflows, and DeepSeek has yet to demonstrate comparable functionality in its app. That said, the company did release an open-source multimodal model today, Janus Pro, which it says outperforms DALL·E 3, Stable Diffusion 3 and other industry-leading image generation models on third-party benchmarks.

No voice mode

DeepSeek-R1 also lacks a voice interaction mode, a feature that has become increasingly important for accessibility and convenience. ChatGPT’s voice mode allows for natural, conversational interactions, making it a superior choice for hands-free use or for users with different accessibility needs.

Be excited for DeepSeek’s future potential — but also be wary of its challenges

Yes, DeepSeek-R1 can — and likely will — add voice and vision capabilities in the future. But doing so is no small feat.

Integrating image generation, vision analysis, and voice capabilities requires substantial development resources and, ironically, many of the same high-performance GPUs that investors are now undervaluing. Deploying these features effectively and in a user-friendly way is another challenge entirely.

DeepSeek-R1’s accomplishments are impressive and signal a promising shift in the global AI landscape. However, it’s crucial to keep the excitement in check. For now, ChatGPT remains the better-rounded and more capable product, offering a suite of features that DeepSeek simply cannot match. Let’s appreciate the advancements while recognizing the limitations and the continued importance of U.S. AI innovation and investment.
